Data, Code, Software with Documentation

To support our interdisciplinary research, the Speech Disorders and Technology Lab uses three primary data modalities: tongue and lip kinematic data, acoustic data, and brain imaging (MEG) data. Encouraged by the trend toward resource sharing in academia, and partly supported by NIH, we would like to share our data, code, and software with documentation with the research community. Most of the data and other resources will be shared step by step, through this lab website as well as other public repositories. This page serves as a portal with links to the shared resources and will be kept up to date. The data used in our publications are the closest to being ready for release. Please check back for updates.

Tongue and Lip Kinematic Data

Tongue and lip kinematic data are the x, y, z coordinates of sensors attached to the tongue and lips during speech production tasks. This is the primary data modality we use in the NIH-funded silent speech interface project. The main goal of this project is to map these coordinates to text or speech acoustics, which is the software component of the silent speech interface.
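As a rough illustration of the data layout described above, the sketch below represents one recording as a list of frames, each holding an (x, y, z) coordinate per sensor, and flattens it into a per-frame feature vector of the kind a recognition model might take as input. The sensor names, the units, the sample values, and the `flatten` helper are all hypothetical and chosen for illustration only; they do not describe the format of the shared data.

```python
from typing import Dict, List, Tuple

Coordinate = Tuple[float, float, float]  # (x, y, z); units are an assumption
Frame = Dict[str, Coordinate]            # sensor name -> coordinate at one time step

# Hypothetical sensor labels, not the lab's actual naming scheme.
SENSORS = ["tongue_tip", "tongue_back", "upper_lip", "lower_lip"]


def flatten(frames: List[Frame]) -> List[List[float]]:
    """Convert frames into a (num_frames x 3 * num_sensors) feature matrix."""
    return [
        [value for name in SENSORS for value in frame[name]]
        for frame in frames
    ]


# Two made-up frames of a single recording.
recording: List[Frame] = [
    {
        "tongue_tip": (0.0, 1.0, 2.0),
        "tongue_back": (3.0, 4.0, 5.0),
        "upper_lip": (6.0, 7.0, 8.0),
        "lower_lip": (9.0, 10.0, 11.0),
    },
    {
        "tongue_tip": (0.5, 1.5, 2.5),
        "tongue_back": (3.5, 4.5, 5.5),
        "upper_lip": (6.5, 7.5, 8.5),
        "lower_lip": (9.5, 10.5, 11.5),
    },
]

features = flatten(recording)
```

Each row of `features` holds the coordinates for one time step, ordered sensor by sensor.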

Cao, B., Teplansky, K., Sebkhi, N., Bhavsar, A., Inan, O., Samlan, R., Mau, T., & Wang, J. (2022). Data augmentation for end-to-end silent speech recognition for laryngectomees, Proc. Interspeech, pp. 3653 - 3657.

Teplansky, K., Wisler, A., Cao, B., Liang, W., Whited, C. W., Mau, T., & Wang, J. (2020). Tongue and lip motion patterns in alaryngeal speech, Proc. Interspeech, Shanghai, China, pp. 4576 - 4580.

Kim, M., Cao, B., Mau, T., & Wang, J. (2017). Speaker-independent silent speech recognition from flesh point articulatory movements using an LSTM neural network, IEEE/ACM Transactions on Audio, Speech, and Language Processing, 25(12): 2323-2336.

Alaryngeal Speech (Acoustic) Data

Besides kinematic data, we have also collected acoustic data from both laryngectomees and healthy controls. We are also sharing the alaryngeal speech data, which can help advance the understanding of alaryngeal speech production, an understudied area.

Cao, B., Sebkhi, N., Bhavsar, A., Inan, O. T., Samlan, R., Mau, T., & Wang, J. (2021). Investigating speech reconstruction for laryngectomees for silent speech interfaces, Proc. Interspeech, Brno, Czechia, pp. 651 - 655.

Neuroimaging (MEG) Data

In the brain-computer interface project, we use magnetoencephalography (MEG), a non-invasive neuroimaging technique, to collect data from participants during speech perception, covert (imagined) speech, and overt (spoken aloud) speech tasks. We are also interested in sharing these unique data with the research community. The data are currently in preparation. We welcome more researchers to work on the challenging neural speech decoding task.

Kwon, J., Harwath, D., Dash, D., Ferrari, P., & Wang, J. (2024). Direct Speech Synthesis from Non-Invasive, Neuromagnetic Signals, Proc. Interspeech, 412-416. (demo audio samples)

Dash, D., Ferrari, P., & Wang, J. (2020). Decoding imagined and spoken phrases from non-invasive neural (MEG) signals, Frontiers in Neuroscience, 14(290), 1-15.

Please send inquiries about the data above to Dr. Wang.

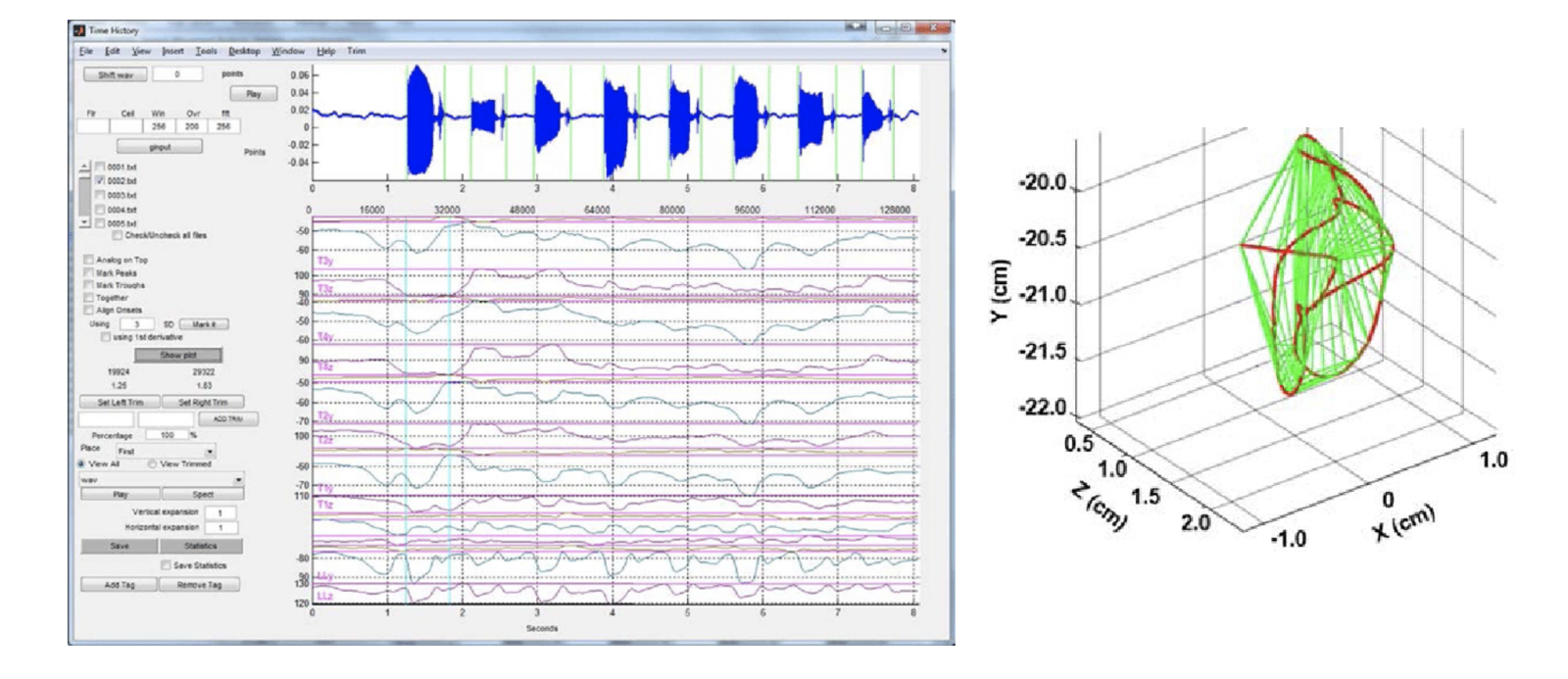

SMASH: Speech Movement Analysis, Statistics, and Histograms

Co-developed with Dr. Jordan Green at MGH and Harvard University, SMASH is a MATLAB-based software package focused on speech movement (kinematic) data processing, visualization, and analysis (Green, Wang, & Wilson, Interspeech 2013). Please contact Dr. Green for more information about SMASH or to obtain a copy. The code comes with detailed documentation, including installation instructions, a user guide, and a developer guide. Although we do not have resources for technical support, feel free to contact Dr. Jun Wang with any general questions.